To ensure autonomous navigation capabilities, a robot must be equipped with both a localization mechanism and a representation of the environment (map).

Odometry alone, based on wheel rotation measurements, is not sufficient, as it introduces cumulative errors that increase over time.

Therefore, a map is required to serve as an external reference, enabling the correction of these errors and allowing the robot to determine its position with greater accuracy.

In robotics, a map is a computational representation of the real environment. It is not a perfect copy, but a simplified, symbolic version that enables a robot to:

- localize itself,
- move autonomously,
- avoid obstacles,
- reach goals.

The level of detail of a map directly affects:

- the accuracy of localization,
- the computational cost of processing and using the map,
- the memory required to store it.

Therefore, the designer must always seek a balance between accuracy and efficiency.
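A quick calculation makes this trade-off concrete. The sketch below assumes a dense grid that stores one byte per cell (as ROS's `nav_msgs/OccupancyGrid` does with one `int8` per cell) and a hypothetical building of 50 m by 50 m with a 5 m ceiling. The helper names are illustrative.

```python
import math


def cells_along(length_m: float, resolution_m: float) -> int:
    """Cells needed to cover one axis; round() absorbs float noise such as 999.9999..."""
    return math.ceil(round(length_m / resolution_m, 9))


def dense_grid_bytes(dims_m: tuple[float, ...], resolution_m: float, bytes_per_cell: int = 1) -> int:
    """Memory for a dense 2D or 3D grid: one value per cell, no compression."""
    cells = 1
    for length in dims_m:
        cells *= cells_along(length, resolution_m)
    return cells * bytes_per_cell


if __name__ == "__main__":
    for res in (0.10, 0.05, 0.01):
        size_2d = dense_grid_bytes((50.0, 50.0), res)
        size_3d = dense_grid_bytes((50.0, 50.0, 5.0), res)
        print(f"{res:.2f} m cells: 2D {size_2d / 1e6:.2f} MB, 3D {size_3d / 1e6:.1f} MB")
```

Halving the cell size multiplies the memory of a 2D grid by four and that of a dense 3D grid by eight. This is one reason 3D maps usually rely on sparse or hierarchical storage.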

In robotic systems, maps can be categorized according to their structure, level of abstraction, and purpose. The following sections present the principal types of maps used in autonomous navigation.

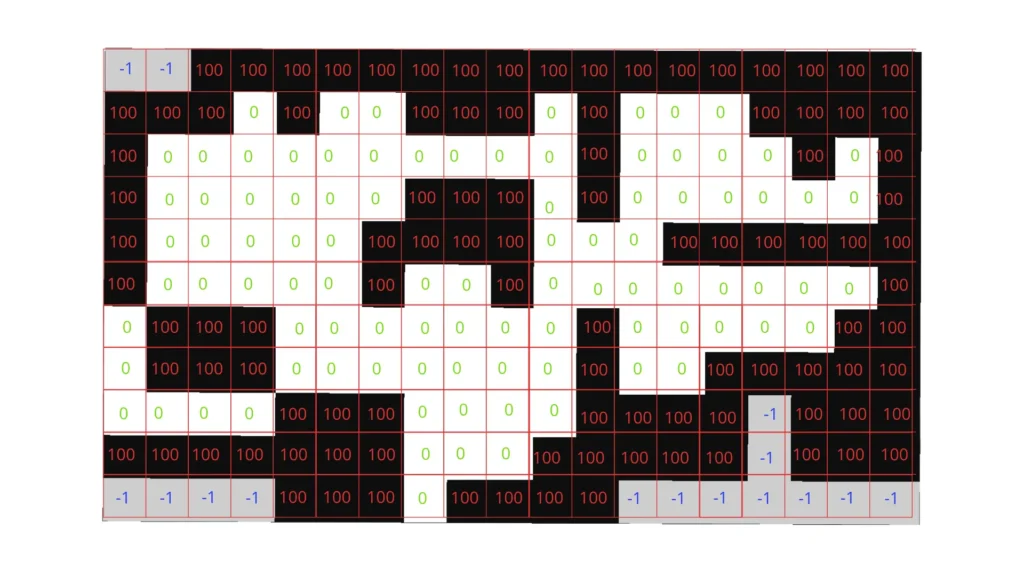

Metric maps, commonly implemented as occupancy grids, represent the environment as a discretized two-dimensional grid. Each cell stores an estimate of the probability that the corresponding area is occupied, and cells that have never been observed are marked as unknown. Classifying cells as free, occupied, or unknown lets the robot distinguish navigable areas from obstacles.
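One widely used way to maintain these per-cell probabilities is the log-odds update from probabilistic robotics. The sketch below assumes a simple inverse sensor model: a beam ending in a cell counts as 0.7 occupancy evidence, and a beam passing through counts as 0.4. It also assumes free and occupied thresholds of 0.25 and 0.65. These numbers, and the `OccupancyGrid` class itself, are illustrative assumptions and not the API of any SLAM package.

```python
import math

# Assumed inverse sensor model, expressed in log-odds: log(p / (1 - p)).
L_OCC = math.log(0.7 / 0.3)   # evidence added when a beam ends in the cell
L_FREE = math.log(0.4 / 0.6)  # evidence added when a beam passes through the cell
L_MIN, L_MAX = -5.0, 5.0      # clamping keeps cells able to change later


class OccupancyGrid:
    def __init__(self, width: int, height: int) -> None:
        self.width, self.height = width, height
        self.log_odds = [[0.0] * width for _ in range(height)]  # 0.0 means p = 0.5
        self.observed = [[False] * width for _ in range(height)]

    def update(self, x: int, y: int, hit: bool) -> None:
        """Fuse one observation of cell (x, y) by adding its log-odds evidence."""
        delta = L_OCC if hit else L_FREE
        self.log_odds[y][x] = min(L_MAX, max(L_MIN, self.log_odds[y][x] + delta))
        self.observed[y][x] = True

    def probability(self, x: int, y: int) -> float:
        """Convert log-odds back to an occupancy probability."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.log_odds[y][x]))

    def state(self, x: int, y: int, free_below: float = 0.25, occ_above: float = 0.65) -> str:
        """Classify a cell as 'free', 'occupied', or 'unknown'."""
        if not self.observed[y][x]:
            return "unknown"
        p = self.probability(x, y)
        if p >= occ_above:
            return "occupied"
        if p <= free_below:
            return "free"
        return "unknown"  # observed, but the evidence is still inconclusive


grid = OccupancyGrid(3, 3)
for _ in range(3):
    grid.update(1, 1, hit=True)    # a beam repeatedly ends at (1, 1)
    grid.update(0, 0, hit=False)   # beams repeatedly pass through (0, 0)
print(grid.state(1, 1), grid.state(0, 0), grid.state(2, 2))
```

Working in log-odds turns the Bayesian update into an addition. This is cheap enough to apply to every cell a scan touches.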

This representation is computationally efficient, relatively lightweight, and has therefore become a standard in mobile robotics.

Occupancy grids are particularly well suited for path planning and obstacle avoidance. They are typically generated using SLAM (Simultaneous Localization and Mapping) techniques, for example in ROS 2, where SLAM packages such as slam_toolbox produce maps that the Nav2 framework uses for navigation.
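As a minimal illustration of grid-based planning, the sketch below runs a breadth-first search on a binary grid (0 = free, 1 = occupied) with 4-connected moves. It is a deliberately simplified toy. Real planners, including those in Nav2, work on cost maps, inflate obstacles, and typically use Dijkstra- or A*-style search.

```python
from collections import deque


def bfs_path(grid, start, goal):
    """Shortest 4-connected path on a grid where 0 = free and 1 = occupied.

    Cells are (row, col) tuples. Returns the list of cells from start to goal,
    or None if the goal is unreachable."""
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back through parents to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None


grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],   # a wall with a gap on the right
    [0, 0, 0, 0],
]
print(bfs_path(grid, (0, 0), (2, 0)))
```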

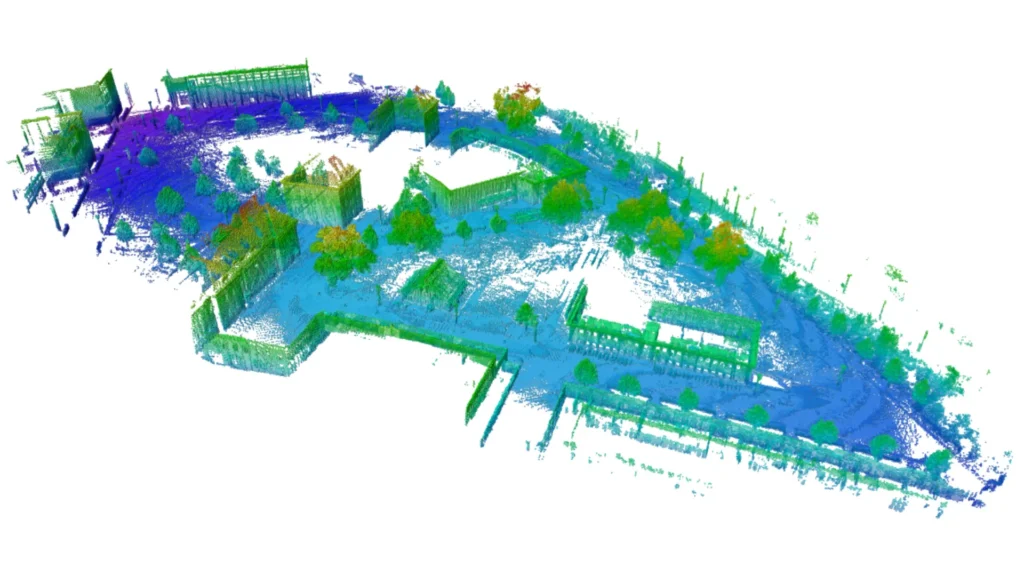

Three-dimensional geometric maps extend metric representations into volumetric space. They may be constructed as point clouds, polygonal meshes, or hierarchical voxel structures such as OctoMap.

These maps provide a detailed reconstruction of the environment, including elevation and overhanging obstacles. This makes them valuable, and often necessary, for aerial drones, legged robots, and autonomous vehicles operating in complex, unstructured environments.

While they offer a high degree of accuracy, their generation and processing impose significant computational and memory demands, requiring capable hardware and optimized algorithms for real-time use.
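OctoMap stores occupancy in an octree. As a simpler stand-in, the sketch below quantizes a point cloud into a sparse voxel map: a dictionary keyed by integer voxel indices, so that only occupied space consumes memory. The 5 cm voxel size and the sample points are assumptions, and this is not the OctoMap API.

```python
import math
from collections import Counter


def voxelize(points, voxel_size):
    """Map each (x, y, z) point to the integer index of the voxel containing it
    and count the points per voxel, giving a sparse voxel map."""
    voxels = Counter()
    for x, y, z in points:
        # floor (not int()) so negative coordinates land in the correct voxel
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        voxels[key] += 1
    return voxels


cloud = [(0.01, 0.02, 0.00), (0.04, 0.01, 0.03), (0.12, 0.00, 0.00), (0.00, 0.00, 1.51)]
vox = voxelize(cloud, 0.05)
print(len(vox), dict(vox))
```

Here the four points collapse into three voxels. On real scans with hundreds of thousands of points, the same quantization keeps a volumetric map tractable. OctoMap goes further by pruning octree nodes whose children all share the same state.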

Topological maps describe the environment as a graph structure, in which nodes correspond to relevant locations (e.g., rooms, hallways) and edges represent the navigable connections between them.

Unlike metric maps, they do not encode geometric precision but rather emphasize the relational structure of the environment.

Their compactness makes them suitable for high-level planning and efficient storage, allowing robots to reason in terms of connectivity rather than detailed geometry.
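A topological map can be stored as an adjacency list and searched with a standard graph algorithm. The sketch below uses Dijkstra's algorithm over a hypothetical four-room building. The place names and edge weights (approximate travel distances) are invented for illustration. An unweighted map would work the same way with every weight set to 1.

```python
import heapq

# Hypothetical building: nodes are places, edge weights are rough travel costs (m).
TOPO_MAP = {
    "lab": {"hallway": 5.0},
    "hallway": {"lab": 5.0, "kitchen": 8.0, "office": 4.0},
    "office": {"hallway": 4.0, "kitchen": 6.0},
    "kitchen": {"hallway": 8.0, "office": 6.0},
}


def plan_route(graph, start, goal):
    """Dijkstra over the place graph; returns (total_cost, [places]) or None."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, route = heapq.heappop(queue)
        if node == goal:
            return cost, route
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph[node].items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + weight, neighbor, route + [neighbor]))
    return None


print(plan_route(TOPO_MAP, "lab", "kitchen"))
```

The result is a sequence of places rather than coordinates. Following it still requires a metric layer for the motion between places.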

However, their abstraction limits their use in fine-grained navigation tasks where precise obstacle information is required.

Hybrid maps combine elements of both metric and topological representations, sometimes enriched with semantic information. For instance, a robot may rely on a global topological structure for efficient long-range planning while simultaneously maintaining local occupancy grids for precise navigation and obstacle avoidance.

In more advanced implementations, hybrid maps also integrate semantic labels, allowing robots not only to navigate but also to interpret their surroundings (e.g., identifying a space as a “kitchen” or “office”).
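One possible way to organize such a layered map in code is sketched below. A hypothetical `HybridMap` holds places that each carry a semantic label and a small local occupancy grid, while the links between places form the topological layer. The class names and fields are assumptions for illustration, not a standard data structure.

```python
from dataclasses import dataclass, field


@dataclass
class Place:
    label: str                       # semantic layer, e.g. "kitchen"
    local_grid: list[list[int]]      # metric layer: 0 = free, 1 = occupied
    neighbors: set[str] = field(default_factory=set)  # topological layer


@dataclass
class HybridMap:
    places: dict[str, Place] = field(default_factory=dict)

    def add_place(self, name: str, label: str, local_grid: list[list[int]]) -> None:
        self.places[name] = Place(label, local_grid)

    def connect(self, a: str, b: str) -> None:
        """Add an undirected, navigable connection between two places."""
        self.places[a].neighbors.add(b)
        self.places[b].neighbors.add(a)

    def find_by_label(self, label: str) -> list[str]:
        """Answer semantic queries such as 'where is a kitchen?'."""
        return sorted(name for name, place in self.places.items() if place.label == label)


hmap = HybridMap()
hmap.add_place("room_a", "kitchen", [[0, 0], [0, 1]])
hmap.add_place("room_b", "office", [[0, 0], [0, 0]])
hmap.connect("room_a", "room_b")
print(hmap.find_by_label("kitchen"), hmap.places["room_b"].neighbors)
```

A global planner would search the `neighbors` links as in a topological map. Once the robot is inside the target place, it would hand over to a grid planner working on `local_grid`.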

This layered approach provides a powerful balance between computational efficiency, accuracy, and contextual understanding, making hybrid maps an increasingly common choice in modern robotic systems.

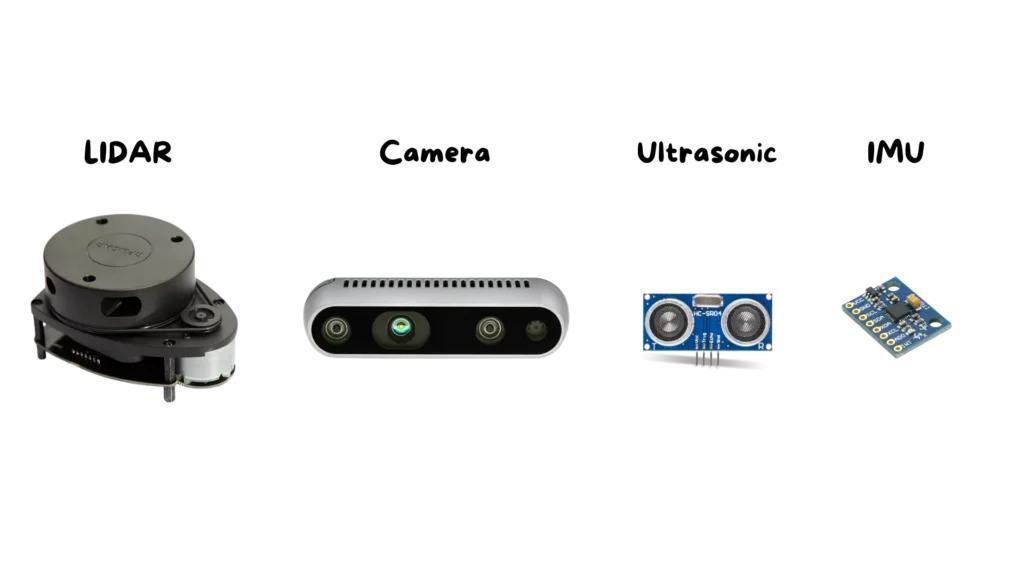

Robots rely on sensors to perceive and model the environment, since odometry alone is not sufficient for accurate localization. Each sensor provides different types of information, and understanding their characteristics is essential to determine which technology best suits a specific application.

LIDAR sensors are among the most common in robotics for mapping and localization tasks. They operate by emitting laser beams and measuring the time of flight of the reflected signal, allowing them to calculate distances with high accuracy.
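For a pulsed time-of-flight sensor, the range follows from the round trip of the light pulse. The numbers below are illustrative and not the specification of any particular device:

$$
d = \frac{c\,\Delta t}{2}, \qquad c \approx 2.998 \times 10^{8}\ \text{m/s}
$$

$$
\Delta t = 20\ \text{ns} \;\Rightarrow\; d \approx \frac{2.998 \times 10^{8} \times 20 \times 10^{-9}}{2} \approx 3.0\ \text{m}
$$

The factor of 2 accounts for the pulse traveling to the target and back. The same relation shows why timing precision matters: a timing error of 1 ns corresponds to roughly 15 cm of range error.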

Their accurate range measurements make them particularly effective for building two-dimensional occupancy grid maps, which are widely used in mobile robots.
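Before a scan can update an occupancy grid, each range reading must be converted into a point in the map frame. The sketch below mirrors the `angle_min`, `angle_increment`, `range_min`, `range_max`, and `ranges` fields of the ROS `sensor_msgs/LaserScan` message, but takes them as plain arguments. It assumes the sensor pose `(x, y, theta)` in the map frame is already known.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, pose, range_min=0.05, range_max=12.0):
    """Convert a planar range scan into (x, y) endpoints in the map frame.

    pose is the sensor pose (x, y, theta) in the map frame. Readings that are
    not finite or fall outside [range_min, range_max] are skipped as invalid."""
    px, py, ptheta = pose
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r < range_min or r > range_max:
            continue
        beam = ptheta + angle_min + i * angle_increment  # beam direction in the map frame
        points.append((px + r * math.cos(beam), py + r * math.sin(beam)))
    return points


# Hypothetical three-beam scan: right, straight ahead (no return), left.
pts = scan_to_points([1.0, float("inf"), 2.0], -math.pi / 2, math.pi / 2, pose=(1.0, 0.0, 0.0))
print(pts)
```

The endpoints mark occupied cells. The cells along each beam, between the sensor and the endpoint, are the ones a grid update would mark as free.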

Their main advantages are precision and robustness. Their drawbacks are cost and, for 2D models, a single scanning plane: obstacles above or below that plane, such as a table top, can go undetected.

Cameras are another fundamental sensor for robotic perception. Traditional RGB cameras capture visual information, providing images rich in color and texture, while RGB-D cameras integrate depth data, combining visual detail with three-dimensional structure.

These sensors enable robots to build dense 3D maps, recognize objects, and even associate semantic meaning with elements of the environment. Their flexibility makes them highly valuable, but they are sensitive to lighting conditions and can require significant computational resources to process images in real time.
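Building a 3D map from an RGB-D camera starts by back-projecting each depth pixel through the pinhole camera model. The sketch below assumes a depth image already converted to meters. It also assumes intrinsics (`fx`, `fy`, `cx`, `cy`) of the order found on common 640 by 480 depth cameras; real values come from the camera's calibration.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Pixel (u, v) with depth Z -> 3D point in the camera frame
    (x to the right, y down, z forward), using the pinhole model."""
    z = depth_m
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)


def depth_image_to_points(depth, fx, fy, cx, cy):
    """Back-project every valid pixel (depth > 0) of a depth image given as nested lists."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0:  # 0 commonly marks "no depth measured"
                points.append(backproject(u, v, z, fx, fy, cx, cy))
    return points


# Assumed intrinsics for a 640x480 depth camera (replace with calibrated values).
FX = FY = 525.0
CX, CY = 319.5, 239.5

x, y, z = backproject(372.0, 239.5, 2.0, FX, FY, CX, CY)
print(x, y, z)
```

Applying this to every pixel of every frame, after transforming each point by the camera pose, yields the dense point clouds mentioned above. It also explains much of the processing cost: a single 640 by 480 frame contains over 300,000 pixels.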

Ultrasonic sensors are based on the emission of high-frequency sound waves and the measurement of the returning echo. They provide approximate distance estimates, especially useful for detecting nearby obstacles.
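The echo time converts to distance the same way as in a time-of-flight LIDAR, except that the speed of sound depends noticeably on air temperature. The sketch below uses the common linear approximation of about 331.3 + 0.606·T m/s for dry air (T in °C). The 5.8 ms echo is an illustrative value.

```python
def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s); linear fit valid near room temperature."""
    return 331.3 + 0.606 * temp_c


def echo_to_distance(echo_time_s: float, temp_c: float = 20.0) -> float:
    """Round-trip echo time -> one-way distance in meters (the pulse travels out and back)."""
    return speed_of_sound(temp_c) * echo_time_s / 2.0


for t in (0.0, 20.0, 35.0):
    print(f"{t:4.1f} C: {echo_to_distance(0.0058, t):.3f} m")
```

Ignoring temperature shifts a one-meter reading by several centimeters across this range. That error comes on top of the measurement noise discussed below.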

Their main strengths are their low cost, simplicity, and small size, which make them suitable for integration in small robots or as complementary safety sensors. However, their accuracy is limited, and the measurements are often noisy, restricting their use to basic collision avoidance rather than precise mapping.

Inertial Measurement Units (IMUs) and odometry provide motion-related data. IMUs measure accelerations and angular velocities, while odometry estimates displacement based on wheel rotations.
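For a differential-drive robot, the distance rolled by each wheel gives the pose update sketched below. That distance comes from encoder ticks, wheel radius, and ticks per revolution. This is a minimal dead-reckoning sketch that assumes the per-step wheel distances are already computed. The 0.3 m wheel base in the example is hypothetical.

```python
import math


def diff_drive_update(pose, d_left, d_right, wheel_base):
    """One dead-reckoning step for a differential-drive robot.

    d_left and d_right are the distances (m) each wheel rolled since the last
    update; wheel_base is the distance (m) between the two wheels."""
    x, y, theta = pose
    d_center = (d_left + d_right) / 2.0          # distance traveled by the robot center
    d_theta = (d_right - d_left) / wheel_base    # change in heading (rad)
    # Midpoint integration: move along the average heading during this step.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    theta = (theta + d_theta + math.pi) % (2.0 * math.pi) - math.pi  # wrap to [-pi, pi)
    return (x, y, theta)


pose = (0.0, 0.0, 0.0)
pose = diff_drive_update(pose, 1.0, 1.0, wheel_base=0.3)                           # 1 m straight
pose = diff_drive_update(pose, -0.075 * math.pi, 0.075 * math.pi, wheel_base=0.3)  # turn in place
print(pose)
```

Every slip or rounding error in `d_left` and `d_right` is carried into all later poses. This is exactly the cumulative error described at the start.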

Together, these sensors give the robot an immediate sense of movement and orientation, which is essential for short-term navigation.

However, both are subject to cumulative errors over time: odometry suffers from wheel slippage and drift, while IMU-based estimates drift because sensor noise and bias are integrated over time (once for orientation, twice for position).
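The effect of integration is easy to see with a robot that is standing still. The sketch below assumes a constant gyroscope bias of 0.001 rad/s and an accelerometer bias of 0.01 m/s², values chosen only for illustration. The heading error grows linearly with time, reaching about 0.06 rad after 60 s. The position error grows with the square of time, reaching about 18 m.

```python
def integrate_gyro(rates, dt, bias=0.0):
    """Integrate angular-rate samples (rad/s) into a heading (rad)."""
    heading = 0.0
    for omega in rates:
        heading += (omega + bias) * dt   # the bias is added to every sample
    return heading


def integrate_accel(accels, dt, bias=0.0):
    """Double-integrate acceleration samples (m/s^2) into a 1D position (m)."""
    velocity = position = 0.0
    for a in accels:
        velocity += (a + bias) * dt
        position += velocity * dt
    return position


# Hypothetical stationary robot: true rates and accelerations are zero,
# sampled at 100 Hz for 60 s, with small constant sensor biases.
n, dt = 6000, 0.01
print(integrate_gyro([0.0] * n, dt, bias=0.001))   # heading error (rad)
print(integrate_accel([0.0] * n, dt, bias=0.01))   # position error (m)
```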

For this reason, they are typically combined with external sensors like LIDARs or cameras to ensure consistent long-term localization.
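A one-dimensional Kalman filter is the simplest way to see why this combination works. Odometry drives the prediction and increases the uncertainty. An absolute position fix, for instance from matching a LIDAR scan against the map, pulls the estimate back and shrinks the uncertainty. The noise variances below are assumptions. Production systems typically use an extended Kalman filter or a particle filter, such as AMCL in Nav2.

```python
def predict(x, p, u, q):
    """Motion update from odometry: shift by u, add process-noise variance q."""
    return x + u, p + q


def correct(x, p, z, r):
    """Measurement update from an absolute fix z with variance r."""
    k = p / (p + r)                 # Kalman gain: 0 = ignore z, 1 = trust z fully
    return x + k * (z - x), (1.0 - k) * p


x, p = 0.0, 0.01                     # start at 0 m, variance 0.01 m^2
x, p = predict(x, p, u=1.0, q=0.04)  # odometry says "moved 1.0 m"
x, p = correct(x, p, z=1.2, r=0.05)  # map-based fix says "you are at 1.2 m"
print(x, p)
```

Without the correction step, `p` would keep growing by `q` with every move. Each map-based fix bounds it again.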

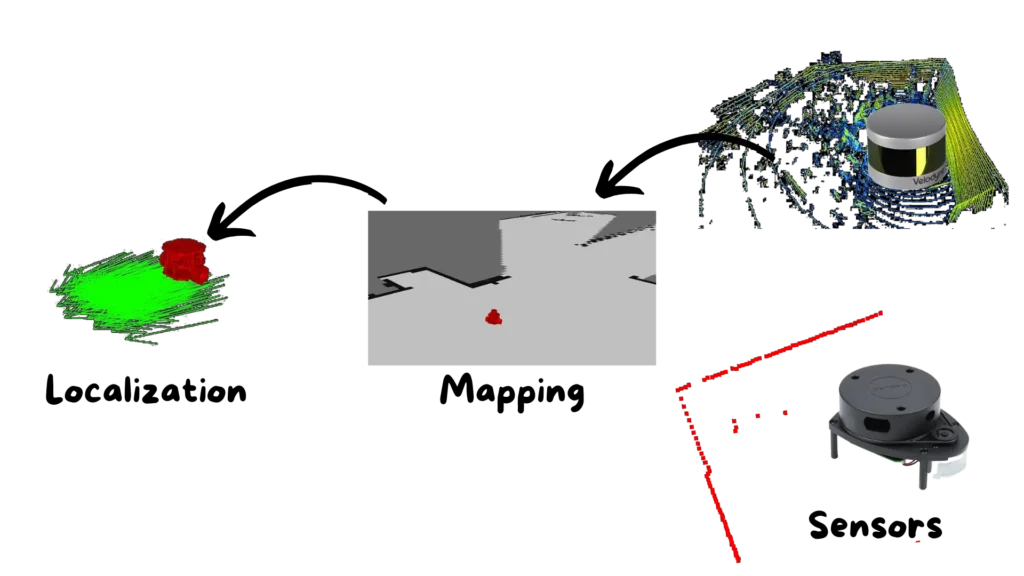

In autonomous robotics, localization is the result of a tightly coupled process that links sensors, maps, and position estimation. This relationship can be expressed as follows:

1. The sensors acquire raw data from the environment, such as distances, images, or motion parameters.
2. These data are processed and structured into a map, which provides a computational model of the environment.
3. The map serves as a reference, enabling the robot to calculate its position and orientation within the environment.

This chain illustrates how each stage depends on the previous one: without sensors, no map can be built; without a map, localization becomes unreliable.
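The chain can be made concrete with histogram (Markov) localization on a tiny one-dimensional map. The sketch assumes a hypothetical cyclic corridor of doors and walls, a sensor that reports the correct feature with probability 0.8, and exact one-cell moves. None of this comes from a specific library.

```python
def sense(belief, world, measurement, p_hit=0.8, p_miss=0.2):
    """Bayes update: weight each cell by how well the map explains the reading."""
    weighted = [
        b * (p_hit if world[i] == measurement else p_miss)
        for i, b in enumerate(belief)
    ]
    total = sum(weighted)
    return [w / total for w in weighted]


def move(belief, steps):
    """Motion update for an exact move on a cyclic map (noise-free, for brevity)."""
    n = len(belief)
    return [belief[(i - steps) % n] for i in range(n)]


world = ["door", "wall", "wall", "door", "wall"]   # hypothetical cyclic corridor
belief = [1.0 / len(world)] * len(world)            # uniform: position unknown

for step, reading in enumerate(["door", "wall", "wall"]):
    if step > 0:
        belief = move(belief, 1)                    # robot advances one cell
    belief = sense(belief, world, reading)

best = max(range(len(belief)), key=belief.__getitem__)
print(best, [round(b, 2) for b in belief])
```

After the first reading, both doors are equally likely. Only by moving and comparing further readings against the map does the belief concentrate on a single cell. Without the map, the same readings would say nothing about position.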

Practical examples include:

- Using a topological map, a robot can recognize that it is located in a specific area (e.g., the kitchen), but it cannot determine exact metric coordinates.
- With a 2D occupancy grid, the robot can estimate its position in metric terms, such as (x = 3.2, y = 5.1), which is essential for precise path planning.
- With a 3D map, the robot gains the ability to navigate complex environments that include elevation and overhanging obstacles, extending its autonomy to fully three-dimensional spaces.
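Converting between metric coordinates and grid cells requires only the map's resolution and the position of its origin. This is similar in spirit to the `resolution` and `origin` entries of a ROS map YAML file. The sketch assumes an unrotated map; the 5 cm resolution and the sample point are illustrative.

```python
import math


def world_to_cell(x, y, origin_x, origin_y, resolution):
    """Metric coordinates (m) -> (col, row) index of the containing cell."""
    col = math.floor((x - origin_x) / resolution)
    row = math.floor((y - origin_y) / resolution)
    return col, row


def cell_to_world(col, row, origin_x, origin_y, resolution):
    """(col, row) index -> metric coordinates of the cell center (m)."""
    return (origin_x + (col + 0.5) * resolution, origin_y + (row + 0.5) * resolution)


cell = world_to_cell(3.22, 5.13, origin_x=0.0, origin_y=0.0, resolution=0.05)
print(cell, cell_to_world(*cell, 0.0, 0.0, 0.05))
```

The round trip returns the cell center rather than the original point. The grid resolution therefore limits the metric precision that a pure grid lookup can offer.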

We have seen how maps represent the essential bridge between raw sensor data and a robot’s ability to move autonomously. Without an adequate map, localization remains inaccurate; with overly complex maps, computations become unmanageable in real time. Only by finding the right balance between sensor quality and map type can a robot safely interact with its environment.

This is just a preview of what you will learn: in the full course you will find in-depth explanations, practical examples, ready-to-use code, and all the material you need to systematically build the skills that will allow you to design and implement autonomous robotic systems.

Assemble your robot and start learning robotics!