When robots and humans share the same workspace, functional safety becomes a first-class requirement. A collaborative robot must be able to adapt its motion to the level of risk in the environment, slowing down or stopping entirely when a person comes too close. Unlike traditional setups with cages or physical barriers, this approach relies on software and sensing to ensure safety.

The principle is realized through Speed and Separation Monitoring (SSM), a continuous process that evaluates the minimum distance between the robot and surrounding objects—commonly using a 2D LiDAR—and translates this measurement into safe motion commands.

This article explores how SSM works conceptually and how it can be integrated into a ROS 2 stack, where the twist_mux node plays a key role in arbitrating velocity commands from multiple sources such as navigation, teleoperation, and safety.

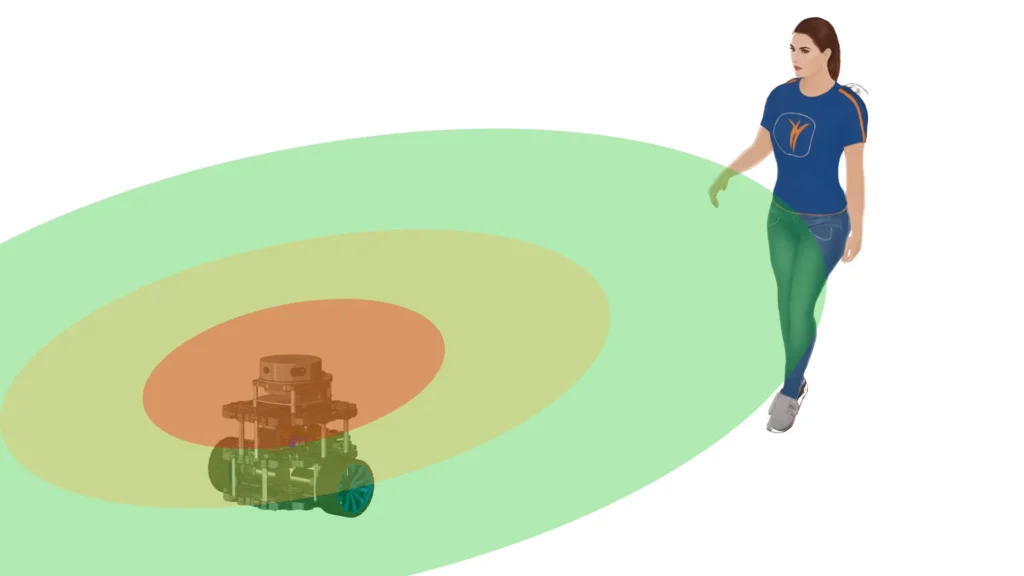

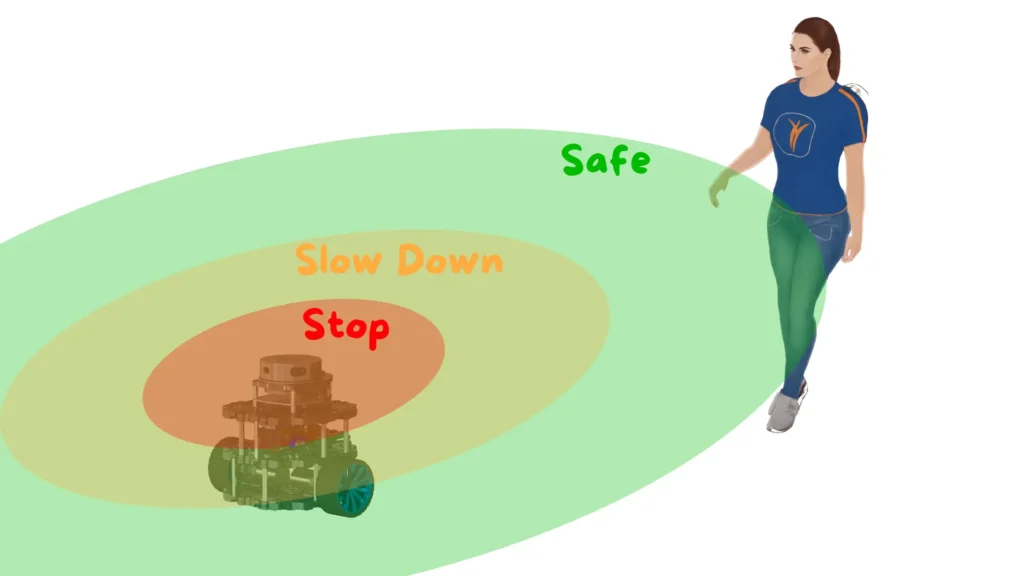

The foundation of SSM is the definition of concentric safety zones around the robot, derived from the LiDAR’s field of view. These zones translate distance into behavior: when no obstacles are present, the robot moves freely; when something enters a warning zone, the robot reduces its speed; and when an object crosses into the danger zone, the robot halts immediately.
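
The zone logic itself fits in a small function. The Python below is a minimal sketch that assumes two circular zones described only by their radii. The names `Zone` and `classify` and the 0.5 m / 1.5 m radii are hypothetical placeholders, not values from any standard or product.

```python
from enum import IntEnum


class Zone(IntEnum):
    """Risk states ordered from least to most restrictive."""
    CLEAR = 0
    WARNING = 1
    DANGER = 2


# Hypothetical radii in meters; real values must come from a risk assessment.
DANGER_RADIUS_M = 0.5
WARNING_RADIUS_M = 1.5


def classify(distance_m: float,
             danger_radius_m: float = DANGER_RADIUS_M,
             warning_radius_m: float = WARNING_RADIUS_M) -> Zone:
    """Map the distance to the closest obstacle onto a concentric zone."""
    if not 0.0 < danger_radius_m < warning_radius_m:
        raise ValueError("expected 0 < danger radius < warning radius")
    if distance_m <= danger_radius_m:
        return Zone.DANGER
    if distance_m <= warning_radius_m:
        return Zone.WARNING
    return Zone.CLEAR


print(classify(2.0).name, classify(1.0).name, classify(0.3).name)  # CLEAR WARNING DANGER
```

Ordering the enum from least to most restrictive means states can be compared directly, for example `Zone.DANGER > Zone.WARNING`.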

The LiDAR continuously provides range measurements across its angular span. Each scan is analyzed to extract the minimum valid distance, and this value is compared to the predefined thresholds. If the closest object lies within the warning zone, the robot transitions into a reduced-speed behavior. If it falls inside the danger zone, the system enforces a complete stop. This cycle repeats at the sensor’s update frequency, ensuring that the robot’s speed always reflects the real-time level of risk.
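
The per-scan extraction step can be sketched as follows. This assumes the data follows the sensor_msgs/LaserScan fields (`ranges`, `range_min`, `range_max`) and the REP 117 encoding of special values: NaN for an invalid reading, +inf for no return within range, and -inf for an object closer than `range_min`. The function name `min_valid_distance` is hypothetical. Treating -inf as zero distance is a deliberately conservative choice, because silently dropping it would hide the closest possible obstacle.

```python
import math
from typing import Optional, Sequence


def min_valid_distance(ranges: Sequence[float], range_min: float,
                       range_max: float) -> Optional[float]:
    """Smallest usable distance in one scan, or None if no reading qualifies."""
    closest: Optional[float] = None
    for r in ranges:
        if r == -math.inf:
            d = 0.0  # REP 117: object closer than range_min, treat as contact
        elif math.isfinite(r) and range_min <= r <= range_max:
            d = r    # regular, in-spec measurement
        else:
            continue  # NaN (invalid), +inf (no return) or out-of-spec value
        if closest is None or d < closest:
            closest = d
    return closest


print(min_valid_distance([2.0, float("nan"), 1.2, float("inf")], 0.1, 10.0))  # 1.2
```

A `None` result means the scan held no usable obstacle reading. That can mean open space (all +inf) or an entirely invalid scan (all NaN). A safety node should probably distinguish the two explicitly, for example by counting NaN readings, instead of treating both as clear.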

Two aspects are particularly critical. The first is the detection geometry and coverage: the accuracy of SSM depends entirely on what the LiDAR can “see,” which is influenced by its angular resolution, field of view, and any occlusions. The second is the reaction characteristics of the system: the update rate, filtering methods, and debouncing must allow fast responses to sudden approaches, while also avoiding unstable or noisy triggers.
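
One way to satisfy both reaction requirements is asymmetric debouncing. The system escalates to a more restrictive state on the first scan that calls for it, but relaxes only after several consecutive calmer scans. The sketch below assumes that policy. `AsymmetricDebouncer` and its default of five scans (0.5 s at a hypothetical 10 Hz scan rate) are illustrative and are not taken from any library.

```python
from enum import IntEnum


class Zone(IntEnum):
    CLEAR = 0
    WARNING = 1
    DANGER = 2


class AsymmetricDebouncer:
    """Escalate immediately; relax only after `release_scans` calmer scans in a row."""

    def __init__(self, release_scans: int = 5) -> None:
        if release_scans < 1:
            raise ValueError("release_scans must be at least 1")
        self.release_scans = release_scans
        self.state = Zone.CLEAR
        self._calm_streak = 0

    def update(self, raw: Zone) -> Zone:
        if raw >= self.state:
            # Same or more restrictive: apply at once, never delay a stop.
            self.state = raw
            self._calm_streak = 0
        else:
            # Less restrictive: accept it only once it has persisted.
            self._calm_streak += 1
            if self._calm_streak >= self.release_scans:
                self.state = raw
                self._calm_streak = 0
        return self.state


deb = AsymmetricDebouncer(release_scans=3)
print([deb.update(z).name for z in
       [Zone.DANGER, Zone.CLEAR, Zone.CLEAR, Zone.CLEAR, Zone.WARNING]])
# ['DANGER', 'DANGER', 'DANGER', 'CLEAR', 'WARNING']
```

Because the asymmetry sits on the release side, noise can at worst keep the robot slow for a few extra scans. It can never postpone a stop.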

The perception stage begins with the LiDAR scan, which produces an array of distances covering a 2D plane. The SSM logic processes this data to identify the closest object, either across the full scan or within a defined sector depending on the application. Because raw LiDAR data is prone to noise, the system typically applies validation steps to discard invalid values and lightweight filtering—such as temporal averaging—to stabilize decisions. This ensures the output is not overly sensitive to random fluctuations. The result of this process is a risk state that can be categorized as clear, warning, or danger.
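
Sector selection and temporal smoothing can be sketched as follows, assuming beam `i` points at `angle_min + i * angle_increment` radians, as in sensor_msgs/LaserScan. `sector_ranges`, `MovingAverage`, and the three-scan window are hypothetical. Plain averaging adds lag. For example, minima of 2.0, 2.0 and 0.3 m average to about 1.43 m, so a person who is already very close could be reported at a warning-level distance. For that reason the class defaults to a conservative variant, which is an assumption not present in the original text: it never reports more than the newest raw reading.

```python
import math
from collections import deque
from typing import List, Sequence


def sector_ranges(ranges: Sequence[float], angle_min: float, angle_increment: float,
                  sector_min: float, sector_max: float) -> List[float]:
    """Readings whose beam angle (rad) lies within [sector_min, sector_max]."""
    return [r for i, r in enumerate(ranges)
            if sector_min <= angle_min + i * angle_increment <= sector_max]


class MovingAverage:
    """Temporal average of the last `window` per-scan minimum distances."""

    def __init__(self, window: int = 3, conservative: bool = True) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self._samples = deque(maxlen=window)
        self.conservative = conservative

    def update(self, distance_m: float) -> float:
        self._samples.append(distance_m)
        average = sum(self._samples) / len(self._samples)
        # Conservative variant (an assumption, not part of plain averaging):
        # never report more than the newest raw reading, so smoothing can
        # slow down a release but never delay a stop.
        return min(average, distance_m) if self.conservative else average


# Five beams from -1.0 rad to +1.0 rad; keep the frontal sector [-0.6, 0.6] rad.
front = sector_ranges([0.4, 2.0, float("nan"), 1.5, 0.3], -1.0, 0.5, -0.6, 0.6)
closest = min((r for r in front if math.isfinite(r)), default=math.inf)
print(front, closest)  # [2.0, nan, 1.5] 1.5
```

In this example, the obstacles at 0.4 m and 0.3 m sit at ±1.0 rad, outside the sector, so they are ignored. A restricted sector is exactly the kind of blind spot that coverage testing has to rule out.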

Once the risk state is determined, the system maps it directly to robot behavior. In a clear state, navigation or teleoperation commands pass through unchanged. In a warning state, the velocity commands are attenuated according to predefined limits, reducing both linear and angular speed. If the state escalates to danger, the system overrides any input and sets the velocity to zero, ensuring an immediate stop.
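
The mapping from risk state to command can be written as a pure function. This sketch assumes a planar base that only uses `linear.x` and `angular.z`. `Twist2D` is a hypothetical stand-in for geometry_msgs/Twist, and the warning limits of 0.3 m/s and 0.5 rad/s are placeholders.

```python
from dataclasses import dataclass
from enum import IntEnum


class Zone(IntEnum):
    CLEAR = 0
    WARNING = 1
    DANGER = 2


@dataclass(frozen=True)
class Twist2D:
    """Hypothetical stand-in for geometry_msgs/Twist on a planar base."""
    linear_x: float = 0.0   # m/s
    angular_z: float = 0.0  # rad/s


# Placeholder warning-zone limits.
WARN_MAX_LINEAR = 0.3   # m/s
WARN_MAX_ANGULAR = 0.5  # rad/s


def clamp(value: float, limit: float) -> float:
    """Limit |value| to `limit` while keeping its sign."""
    return max(-limit, min(limit, value))


def apply_policy(cmd: Twist2D, zone: Zone) -> Twist2D:
    """Deterministic mapping from risk state to the command that is sent on."""
    if zone is Zone.DANGER:
        return Twist2D()  # override any input: zero velocity
    if zone is Zone.WARNING:
        return Twist2D(clamp(cmd.linear_x, WARN_MAX_LINEAR),
                       clamp(cmd.angular_z, WARN_MAX_ANGULAR))
    return cmd  # CLEAR: pass through unchanged


print(apply_policy(Twist2D(1.0, 1.0), Zone.WARNING))  # Twist2D(linear_x=0.3, angular_z=0.5)
```

Clamping each component independently can change the turning radius of the commanded arc. In the example it shrinks from 1.0 m to 0.6 m. Scaling both components by a common factor preserves the path curvature instead, which may matter if the planner relies on it.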

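This mapping must be deterministic and monotonic: as an obstacle gets closer, the robot’s behavior can only become more restrictive, never less. To prevent oscillations when the distance hovers around a threshold, a simple hysteresis mechanism is often introduced. For example, the distance may be required to exceed a threshold by a small margin before the system returns to a less restrictive state.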
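
Hysteresis can be implemented with separate enter and exit distances. A zone is entered at its nominal radius but left only once the distance exceeds that radius plus a margin. `HysteresisClassifier`, the 0.5 m / 1.5 m radii, and the 0.1 m margin in this sketch are hypothetical.

```python
from enum import IntEnum


class Zone(IntEnum):
    CLEAR = 0
    WARNING = 1
    DANGER = 2


class HysteresisClassifier:
    """Enter a zone at its nominal radius, leave it only beyond radius + margin."""

    def __init__(self, danger_m: float = 0.5, warning_m: float = 1.5,
                 margin_m: float = 0.1) -> None:
        if not 0.0 < danger_m < warning_m or margin_m < 0.0:
            raise ValueError("expected 0 < danger_m < warning_m and margin_m >= 0")
        self.danger_m, self.warning_m, self.margin_m = danger_m, warning_m, margin_m
        self.state = Zone.CLEAR

    @staticmethod
    def _zone(d: float, danger_m: float, warning_m: float) -> Zone:
        if d <= danger_m:
            return Zone.DANGER
        if d <= warning_m:
            return Zone.WARNING
        return Zone.CLEAR

    def update(self, d: float) -> Zone:
        enter = self._zone(d, self.danger_m, self.warning_m)
        leave = self._zone(d, self.danger_m + self.margin_m,
                           self.warning_m + self.margin_m)
        # Escalate immediately via the nominal radii; relax only as far as the
        # enlarged exit radii allow. The result is never less restrictive than `enter`.
        self.state = max(enter, min(self.state, leave))
        return self.state


hc = HysteresisClassifier()
print([hc.update(d).name for d in (1.4, 1.55, 1.65)])  # ['WARNING', 'WARNING', 'CLEAR']
```

A reading of 1.55 m keeps the warning state once it has been entered, but does not trigger it from the clear state. That asymmetry is what removes chattering at the boundary.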

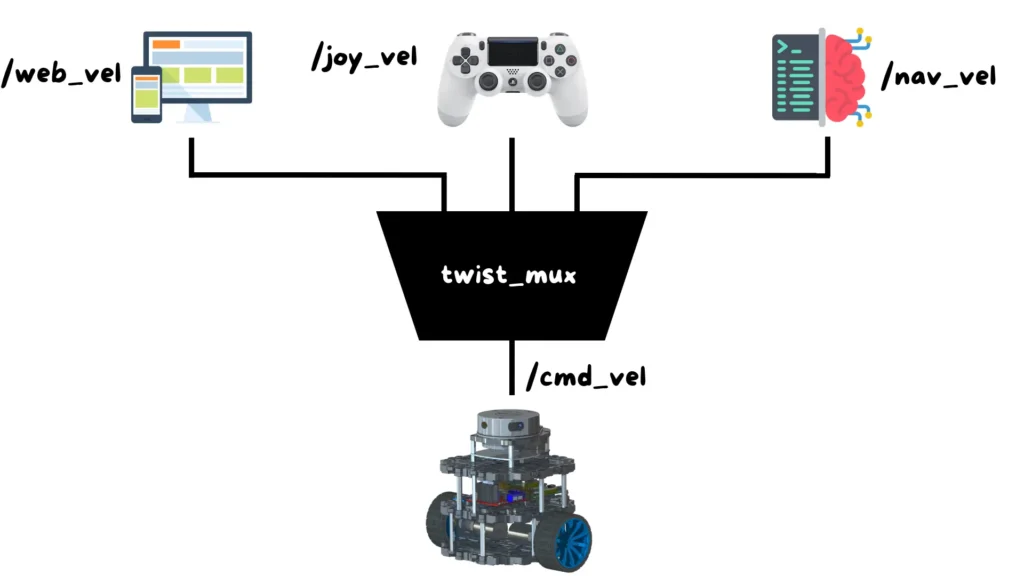

twist_mux is essential in ROS 2 because modern robots usually receive velocity commands from multiple sources. For example, the navigation stack may publish commands to follow a planned path, an operator may teleoperate the robot, and a safety system may inject protective overrides. These commands are all expressed as geometry_msgs/Twist messages, and without proper arbitration they would conflict.

The twist_mux node provides the necessary arbitration by assigning priorities to each input. In practice, navigation might run at a lower priority, teleoperation higher, and safety at the top. If multiple inputs are active, the one with the highest priority is selected.

Additional mechanisms improve robustness. Timeouts ensure that if a source stops publishing, its commands are ignored, so stale data is never applied. Lock topics can block every input whose priority is lower than the lock's own priority, so no motion command from those sources reaches the robot while the lock is engaged. As long as the safety channel is configured with the highest priority, it always prevails, without requiring any changes to the navigation or teleop nodes.
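
To make these rules concrete, here is a simplified Python model of the arbitration described above. An input is considered only while its last message is younger than its timeout. Among those inputs, the one with the highest priority wins. An engaged lock masks every input whose priority is lower than its own. This is a hypothetical model for reasoning about a configuration, not twist_mux's source code. Lock timeouts and message details are left out, and the priorities (10, 100, 200, 255) are placeholders.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Cmd = Tuple[float, float]  # (linear.x, angular.z)


@dataclass
class Source:
    """One velocity input as seen by the mux (hypothetical model)."""
    name: str
    priority: int
    timeout_s: float
    last_stamp: Optional[float] = None  # time of last message, seconds
    last_cmd: Optional[Cmd] = None

    def is_live(self, now: float) -> bool:
        return self.last_stamp is not None and now - self.last_stamp <= self.timeout_s


@dataclass
class Lock:
    """A boolean lock input with its own priority (lock timeouts omitted)."""
    name: str
    priority: int
    engaged: bool = False


def arbitrate(sources: List[Source], locks: List[Lock], now: float) -> Optional[Cmd]:
    """Command of the highest-priority live source that no engaged lock masks."""
    lock_priority = max((lk.priority for lk in locks if lk.engaged), default=0)
    candidates = [s for s in sources
                  if s.is_live(now) and s.priority >= lock_priority]
    if not candidates:
        return None  # in this model, nothing is forwarded
    return max(candidates, key=lambda s: s.priority).last_cmd


nav = Source("navigation", 10, 0.5, last_stamp=0.95, last_cmd=(0.5, 0.0))
teleop = Source("teleop", 100, 0.5)  # silent: never considered
safety = Source("safety", 200, 0.5, last_stamp=0.90, last_cmd=(0.0, 0.0))
estop = Lock("estop", 255, engaged=True)

print(arbitrate([nav, teleop, safety], [], now=1.0))       # (0.0, 0.0): safety wins
print(arbitrate([nav, teleop, safety], [], now=1.42))      # (0.5, 0.0): safety timed out
print(arbitrate([nav, teleop, safety], [estop], now=1.0))  # None: all inputs masked
```

The second call shows why timeouts matter for SSM. Once the safety source stops publishing, navigation regains control only after the safety input's timeout has elapsed.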

In a real ROS 2 setup, the integration of SSM follows a clear flow. First, the LiDAR publishes its scans. The SSM logic processes them, computes the minimum distance, and determines the current risk state. Based on that, it publishes either a reduced velocity or a stop command on the safety channel. The twist_mux node then receives this input alongside navigation and teleop commands, and—using its configured priorities and timeouts—decides which command to forward to the robot.
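
The decision the SSM node makes on every scan can be sketched as a pure function that returns what to publish on the safety channel. The key assumption is that the safety input has the highest twist_mux priority. It must therefore stay silent in the clear state, or it would permanently mask navigation and teleop. The names, the 0.5 m and 1.5 m thresholds, and the uniform 30 % slowdown are hypothetical. For simplicity, this sketch only slows the navigation command.

```python
import math
from typing import Optional, Tuple

Cmd = Tuple[float, float]  # (linear.x, angular.z), simplified geometry_msgs/Twist

# Hypothetical thresholds and slowdown factor.
DANGER_M = 0.5
WARNING_M = 1.5
WARNING_SCALE = 0.3


def safety_output(closest_m: float, nav_cmd: Cmd) -> Optional[Cmd]:
    """What the SSM node publishes on the safety topic for one scan.

    closest_m is the filtered minimum distance; pass math.inf when nothing
    was detected. None means "publish nothing", so that the mux timeout on
    the safety input hands control back to lower-priority sources.
    """
    if closest_m <= DANGER_M:
        return (0.0, 0.0)  # danger: explicit zero-velocity command
    if closest_m <= WARNING_M:
        # warning: slowed-down copy of the navigation command
        return (nav_cmd[0] * WARNING_SCALE, nav_cmd[1] * WARNING_SCALE)
    return None  # clear: stay silent


print(safety_output(math.inf, (0.5, 0.2)), safety_output(0.4, (0.5, 0.2)))  # None (0.0, 0.0)
```

In an rclpy node, this function would typically be called from the sensor_msgs/LaserScan subscription callback. A non-None result would then be published as a geometry_msgs/Twist on the topic that twist_mux reads as its safety input. Two timing consequences follow. In the warning and danger states, the node must republish faster than that input's timeout. After the area clears, navigation resumes roughly one timeout period later.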

Because every system can have different topic names and policies, it is important to align the configuration with the twist_mux settings already defined in your project. The priorities, timeouts, and lock behaviors must reflect the intended safety policy.

Before enabling SSM in environments where humans are present, thorough testing is essential. The first step is verifying sensor coverage, ensuring the LiDAR truly monitors the critical zones without blind spots. Next, the warning and danger thresholds must be validated empirically to match the robot’s braking distance, system latency, and expected human approach speeds. The transitions between states should be observed to confirm that they are deterministic, repeatable, and free from unstable oscillations. Finally, the arbitration behavior must be checked to confirm that twist_mux correctly prioritizes safety overrides and seamlessly restores normal operation once conditions are safe.
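
Threshold validation can start from a back-of-the-envelope lower bound. The sketch below uses a simplified, constant-speed form of the protective separation distance that ISO/TS 15066 defines for SSM. It sums four terms:

- the distance the person covers while the system reacts and the robot brakes;
- the distance the robot covers while reacting;
- the robot's braking distance;
- a margin for sensing uncertainty.

Constant deceleration and all example values are assumptions. The 1.6 m/s human speed is the walking approach speed commonly used in ISO 13855. The result is only a starting point for empirical testing, not a certified value.

```python
def protective_distance_m(v_robot: float, v_human: float, reaction_s: float,
                          decel: float, margin_m: float) -> float:
    """Minimum separation (m) at which a stop must already be triggered.

    Simplified constant-speed form (assumption):
        S = v_h * (T_r + T_s) + v_r * T_r + v_r**2 / (2 * a) + C
    where T_s = v_r / a is the stopping time under constant deceleration a.
    """
    if decel <= 0:
        raise ValueError("deceleration must be positive")
    stop_time_s = v_robot / decel
    human_m = v_human * (reaction_s + stop_time_s)  # person keeps approaching
    robot_reaction_m = v_robot * reaction_s         # robot still moving at v_r
    braking_m = v_robot ** 2 / (2 * decel)          # robot braking distance
    return human_m + robot_reaction_m + braking_m + margin_m


# Example: 0.1 s total latency, 1.0 m/s^2 braking, 0.1 m margin.
for v in (1.0, 0.3):
    print(v, round(protective_distance_m(v, 1.6, 0.1, 1.0, 0.1), 3))
```

With these example numbers, the bound is about 2.46 m at 1.0 m/s and about 0.815 m at 0.3 m/s. A 0.5 m danger radius would therefore be too small even at the lower speed. This illustrates why thresholds have to be derived from the robot's actual speeds, braking performance, and measured latency rather than chosen by feel.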

These validation steps ensure that the SSM configuration is not only theoretically correct but also practically reliable.

Speed and Separation Monitoring provides a practical, effective way to keep people safe around mobile robots by continuously adapting the robot’s motion to the measured risk. Leveraging a 2D LiDAR for distance assessment and twist_mux for command arbitration gives you a robust, ROS 2-native solution that preserves productivity without compromising safety.

If you are interested in this topic and want to explore the full design of safe and autonomous robotic systems with ROS 2, the complete course includes dedicated lessons, practical examples, and ready-to-use material that will allow you to apply these techniques directly to your projects.

Assemble your robot and start learning robotics!